The Reality of AI Video: A No-Bullshit Guide

Hey friends! Today we're diving into something I've been exploring for a while: generating realistic videos with AI tools.

In this guide, I'm cutting through the hype to show you exactly what AI video generation can and can't do right now, plus a proven workflow to create multi-scene videos with consistent characters. Let's get started!


Resources

- Tools mentioned: Flow, Whisk, Google AI Studio, ElevenLabs, Midjourney

- Prompts mentioned: Access them here

- My Veo Visionary Gemini Gem (to generate video prompts)

- Click here for my free AI Toolkit

Why AI Can't Make Movies (yet)

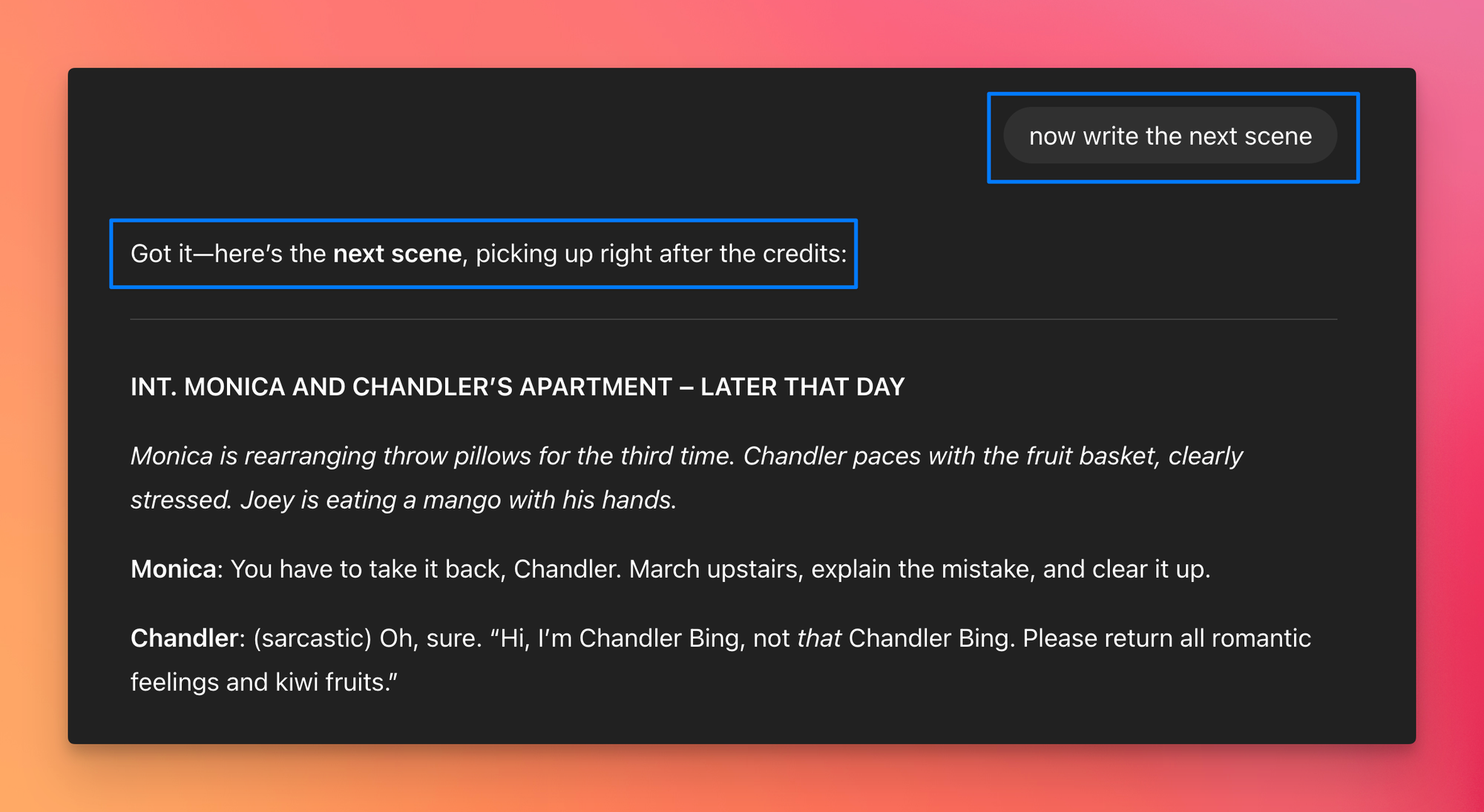

Here's a simple analogy. When you ask ChatGPT to write the opening scene of a TV show and then ask it to write the next scene in the same conversation, it remembers the characters, setting, and narrative. Everything stays consistent.

Video AI models (like Google's Veo models) work completely differently. They have zero memory between generations. Even if you use the exact same prompt to describe a character, the model will generate a slightly different version each time, breaking the consistency between scenes.

This fundamental limitation is why, despite the impressive capabilities of current AI video tools, we can't simply prompt our way to a full movie or professional YouTube video.

What AI Video Can Actually Do Right Now

Current AI video models are incredibly powerful for single shots. Tools like Google's Flow app can create stunning, detailed clips with realistic movement, effects, and even synchronized audio. The quality of individual clips has reached a point where they're often indistinguishable from professional footage.

The problem emerges when you try to create sequential scenes. Without the right techniques, your perfectly rendered Darth Vader in scene one becomes a cheap knockoff in scene two, with a different voice, a different setting, and a different visual style.

The Four-Step Workflow for Consistent AI Videos

Here's the exact workflow to overcome the consistency challenge and create multi-scene videos with the same character throughout.

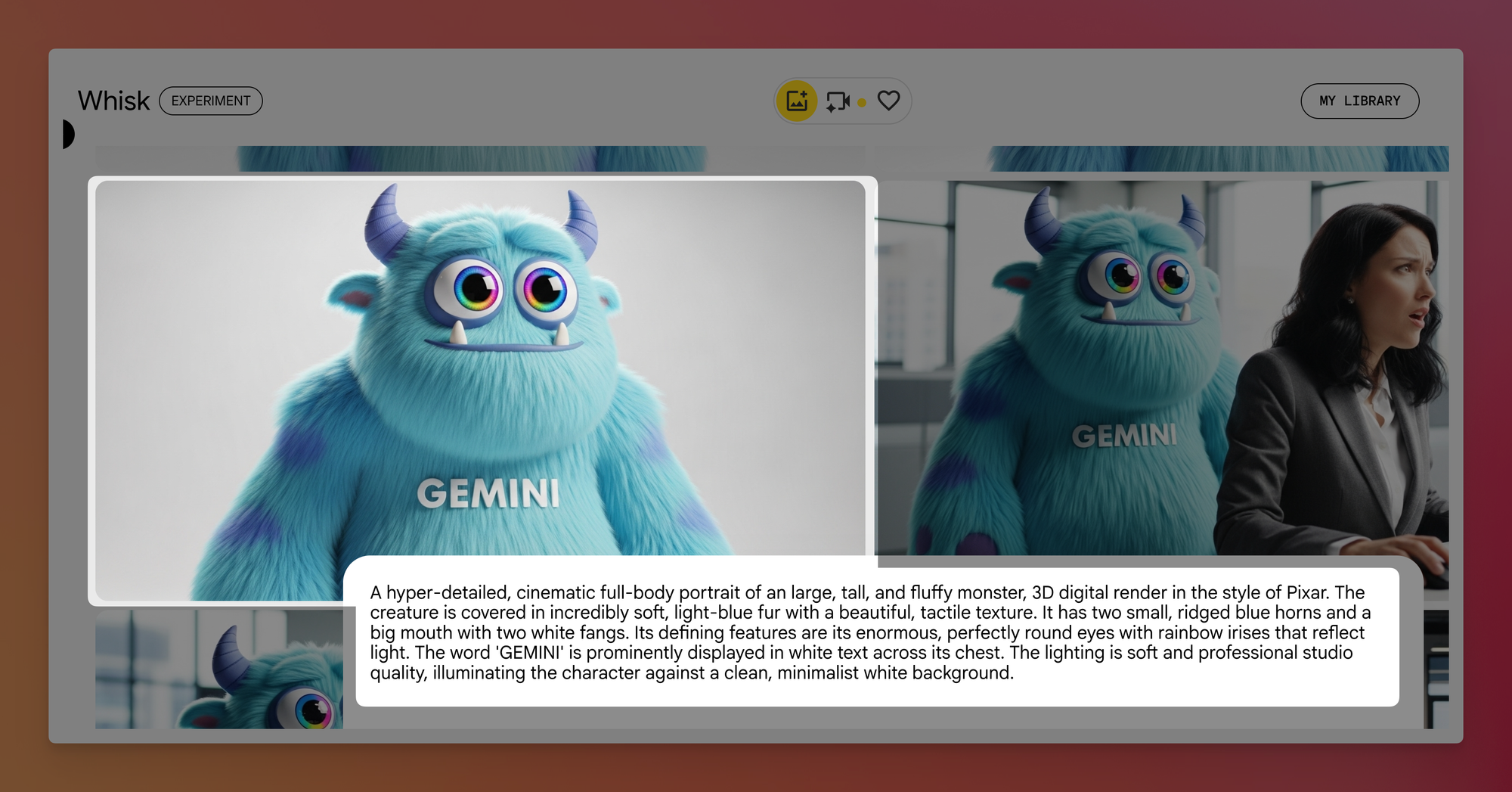

Step 1: Create Your Main Character as a Static Image

Counter-intuitively, the first step in creating AI video is generating a static image. This becomes your character reference that ensures consistency across all scenes.

Using a free tool like Google's Whisk:

- Paste in a detailed character prompt

- Disable "Precise Reference" in settings to give the AI creative freedom

- Generate multiple versions until you get one with minimal flaws

- Use the refine feature with "Precise Reference" enabled to make specific adjustments

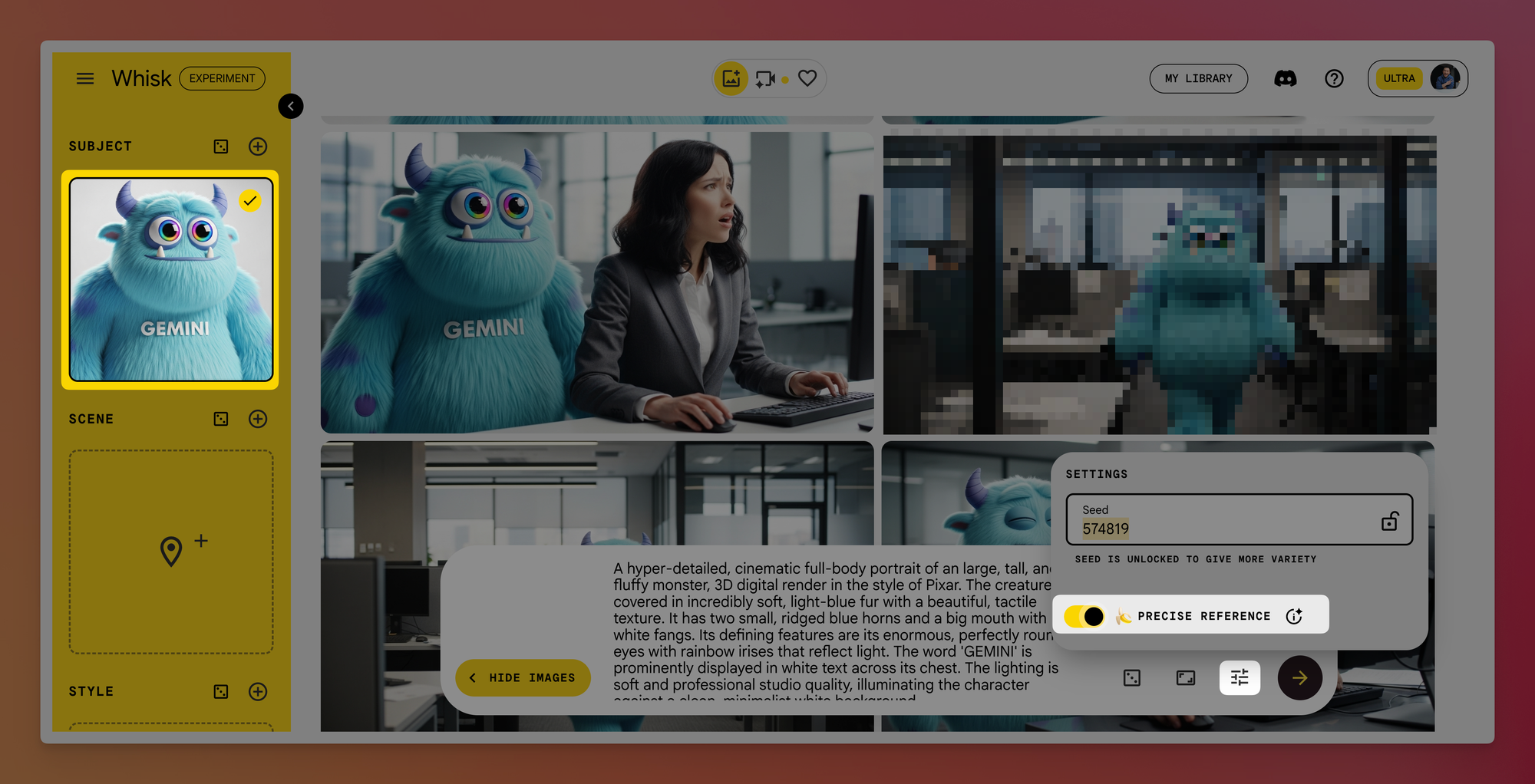

Step 2: Create Starting Frames for Each Scene

Now you'll place your character into different scenes that will become your video clips:

- Upload your character image as the "Subject" in Whisk

- Enable "Precise Reference" in settings

- Generate a still image for each scene you want to create

- The character will remain visually consistent across all starting frames

This step is critical. Without using your character as a subject reference, the AI will generate a completely different character each time, even with identical text prompts.

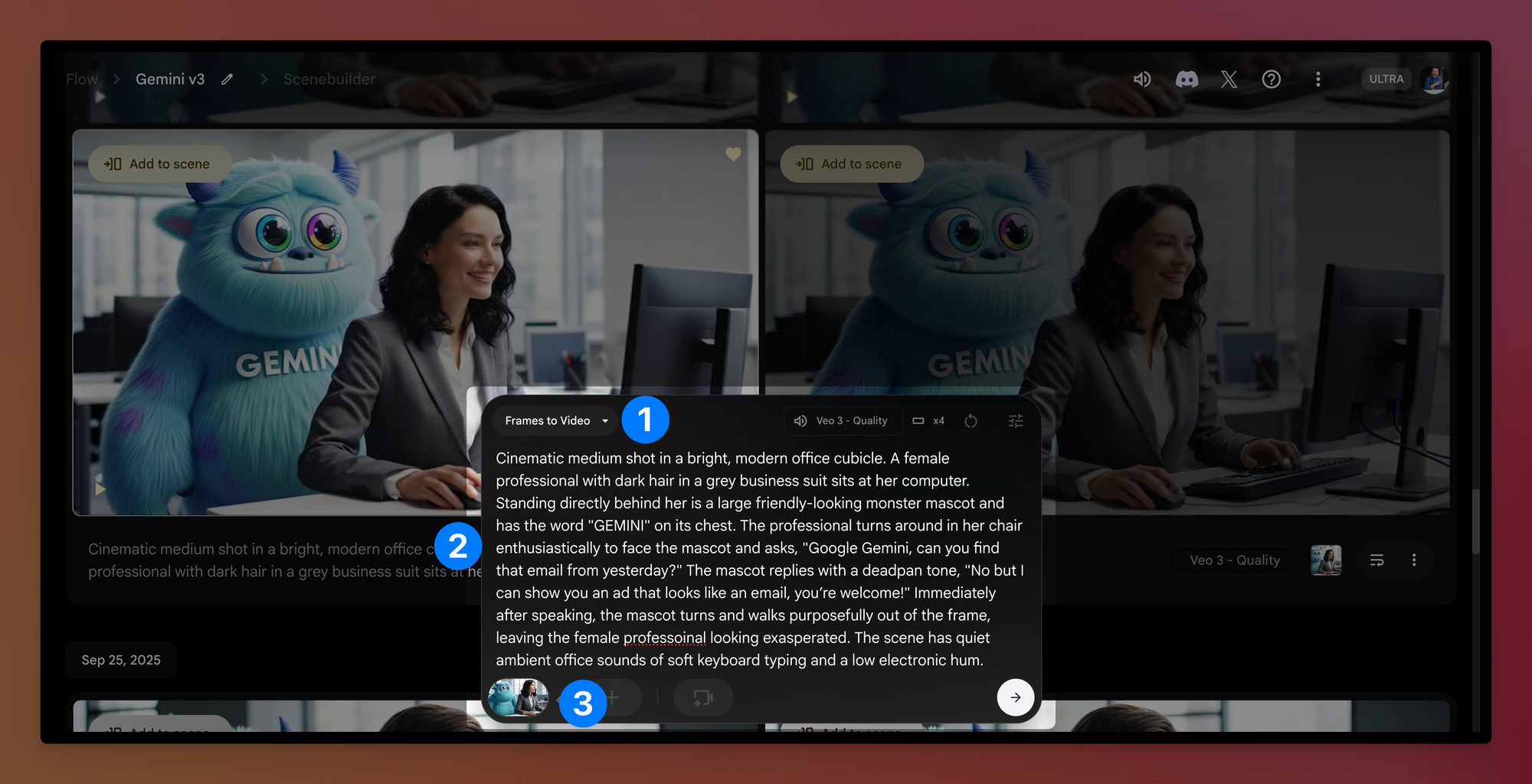

Step 3: Generate Videos from Your Starting Frames

With your consistent starting frames ready, head to Google's Flow app:

- Select the "Frame-to-video" option

- Upload your starting frame

- Write a detailed prompt describing the action and dialogue

- Generate multiple outputs (4 recommended) to increase your chances of getting a usable clip

- Download the best version from each batch

The key here is that by starting from your consistent character image, the video maintains visual consistency even as the scene plays out.
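Why does generating 4 outputs per batch help? Here is a rough back-of-the-envelope model. Suppose each output is usable with some probability p, and each output succeeds or fails independently of the others. Then the chance that at least one of n outputs is usable is 1 - (1 - p)^n. Both p and the independence assumption are illustrative only. Neither is a measured property of Flow or Veo.

```python
def chance_of_usable_clip(p: float, n: int) -> float:
    """Probability that at least one of n generations is usable.

    Assumes each generation is independently usable with probability p.
    This is an illustrative simplification, not a measured model of any tool.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    # Complement of "every one of the n outputs is unusable".
    return 1.0 - (1.0 - p) ** n


if __name__ == "__main__":
    # Hypothetical 30% per-clip success rate, purely for illustration.
    for n in (1, 2, 4, 8):
        print(f"{n} outputs: {chance_of_usable_clip(0.3, n):.0%}")
```

Take that made-up 30% success rate as an example. A single output gives you a 30% shot. A batch of 4 raises it to about 76%, and a batch of 8 raises it to about 94%. Past a certain point, each extra output buys you less, which is one reason a batch of 4 is a reasonable default.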

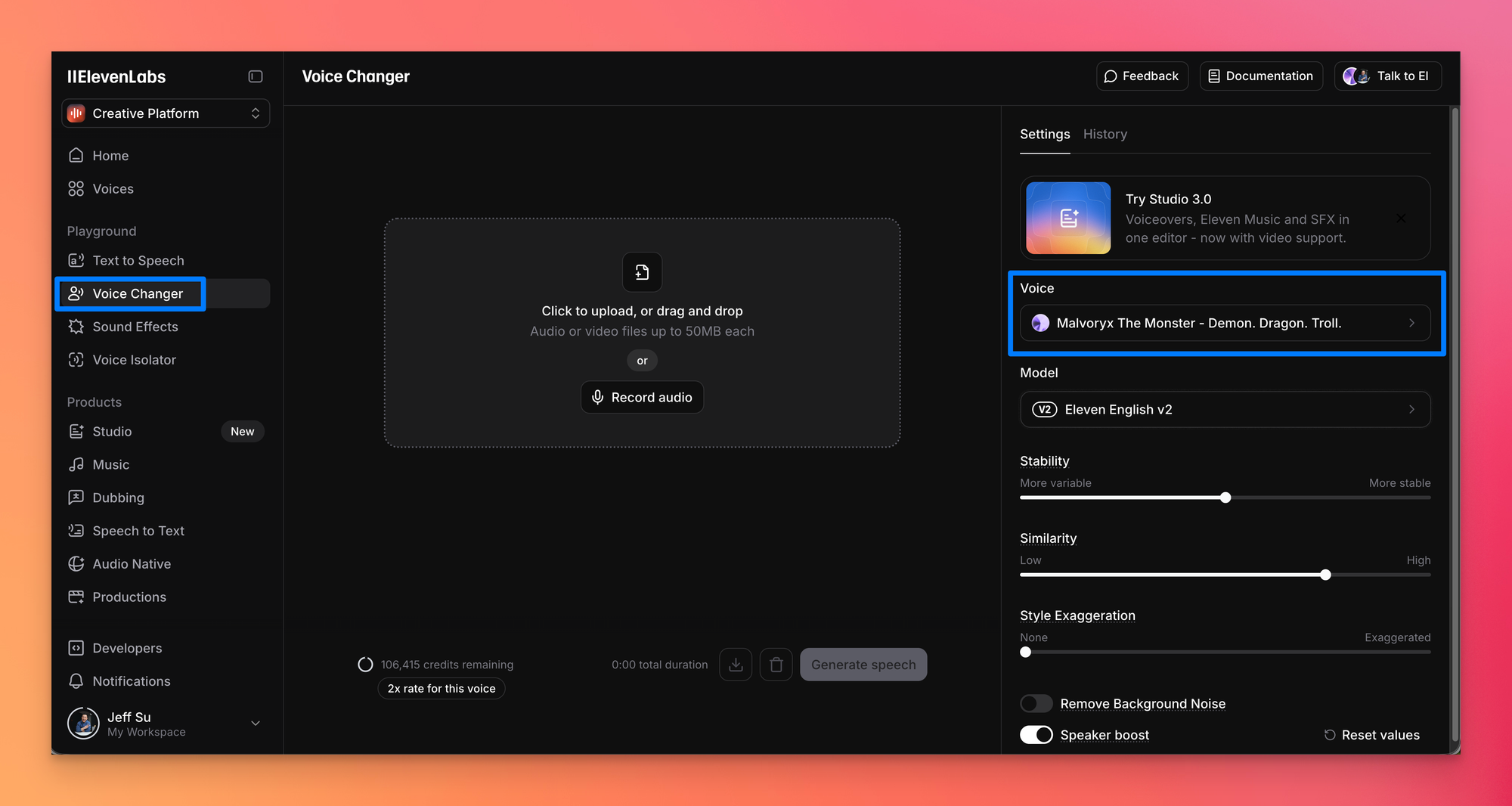

Step 4: Achieve Audio Consistency with Voice Cloning

Even with visually consistent characters, each video clip will give your character a different voice. To fix this:

- Upload your video clips to ElevenLabs' Voice Changer

- Select the same voice for your character across all clips

- Generate new audio files with the consistent voice

- In your video editor, replace only your character's dialogue with the new consistent voice

- Keep the original voices for other characters

- Add ambient sound effects to enhance realism
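On a project with lots of clips, it helps to track which clips actually need the voice swap. The rule is simple: one voice for your main character everywhere, and original audio for everyone else. The sketch below is a hypothetical Python helper for that bookkeeping. It is not part of ElevenLabs or any video editor. `Clip`, `voice_swap_plan`, and the voice label are made-up names used for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Clip:
    """One downloaded clip from Step 3 (a hypothetical record, not a tool's API)."""
    filename: str
    speakers: list[str] = field(default_factory=list)


def voice_swap_plan(clips: list[Clip], character: str, voice: str) -> list[tuple[str, str]]:
    """Return (filename, voice) pairs for every clip where `character` speaks.

    Every pair uses the same voice, so the character sounds identical across
    clips. Clips where the character is silent are skipped, which leaves the
    other characters' original audio untouched.
    """
    return [(clip.filename, voice) for clip in clips if character in clip.speakers]


if __name__ == "__main__":
    clips = [
        Clip("scene1.mp4", ["Vader", "Trooper"]),
        Clip("scene2.mp4", ["Trooper"]),
        Clip("scene3.mp4", ["Vader"]),
    ]
    # "my-vader-voice" is a placeholder label for whichever voice you picked.
    for filename, voice in voice_swap_plan(clips, "Vader", "my-vader-voice"):
        print(f"{filename}: swap Vader's dialogue to {voice}")
```

Here only scene1 and scene3 go through the Voice Changer. The Trooper's lines keep their original audio in every clip.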

The Reality Check

Third-party tools like OpenArt, Hailuo, and Kling market themselves as complete solutions for AI video generation. While they can streamline certain aspects of the process, they still require:

- Manual character generation for consistency

- Audio post-processing for voice matching

- Traditional video editing for final assembly

- Significant technical knowledge to use effectively

The truth is that creating polished AI videos requires combining multiple specialized tools, each handling what it does best:

- Image generation for character creation

- Frame generation for scene consistency

- Frame-to-video for animation

- Voice synthesis for audio consistency

- Traditional editing for final assembly

The Bottom Line

AI video generation has made remarkable progress, but we're far from the "one-prompt movie" future that headlines suggest. Current tools are powerful components in a larger creative workflow, not magic buttons that replace human creativity and technical skill.

Claims that video editing is dead or that AI will replace filmmakers tomorrow are clickbait at best and false advertising at worst. The reality is that AI video tools augment human creativity rather than replace it, requiring significant skill, patience, and manual work to produce professional results.

What we have today are incredibly powerful tools that, when combined thoughtfully, can produce impressive results. But they're tools that require learning, practice, and creative problem-solving to use effectively.

If you enjoyed this...

You might also like: My AI playlist with even more practical, no-bs tips!