The 10% of AI Tools that Drive 90% of My Results

Hey friends! I use around 10 different AI tools for 90% of my work, and each one excels in one specific area. But figuring out which tool works best for which task usually takes months of trial and error.

So today, I'll share the ONE thing that ChatGPT, Gemini, Claude, Perplexity, and NotebookLM each do better than the alternatives, so you can walk away with a clear mental model for when to use what. Let's get started!

Resources

- Essential Power Prompts: A Notion library of 15 battle-tested prompts with video walkthroughs

- HubSpot's "The AI Productivity Stack": A free guide covering 50 tools organized by use case

Key Takeaways

- ChatGPT wins on obedience: Use it when your task has many requirements and missing one breaks everything.

- Gemini wins on multimodality: Use it when you need to process video, audio, images, and text together.

- Claude wins on first-draft quality: Use it when you need working code or polished copy with minimal revision.

Everyday AI

Everyday AI covers the three major general-purpose chatbots: ChatGPT, Gemini, and Claude. These tools seem interchangeable at first glance, but their strengths (i.e., their moats) have become quite distinct.

ChatGPT: The Most Obedient Model

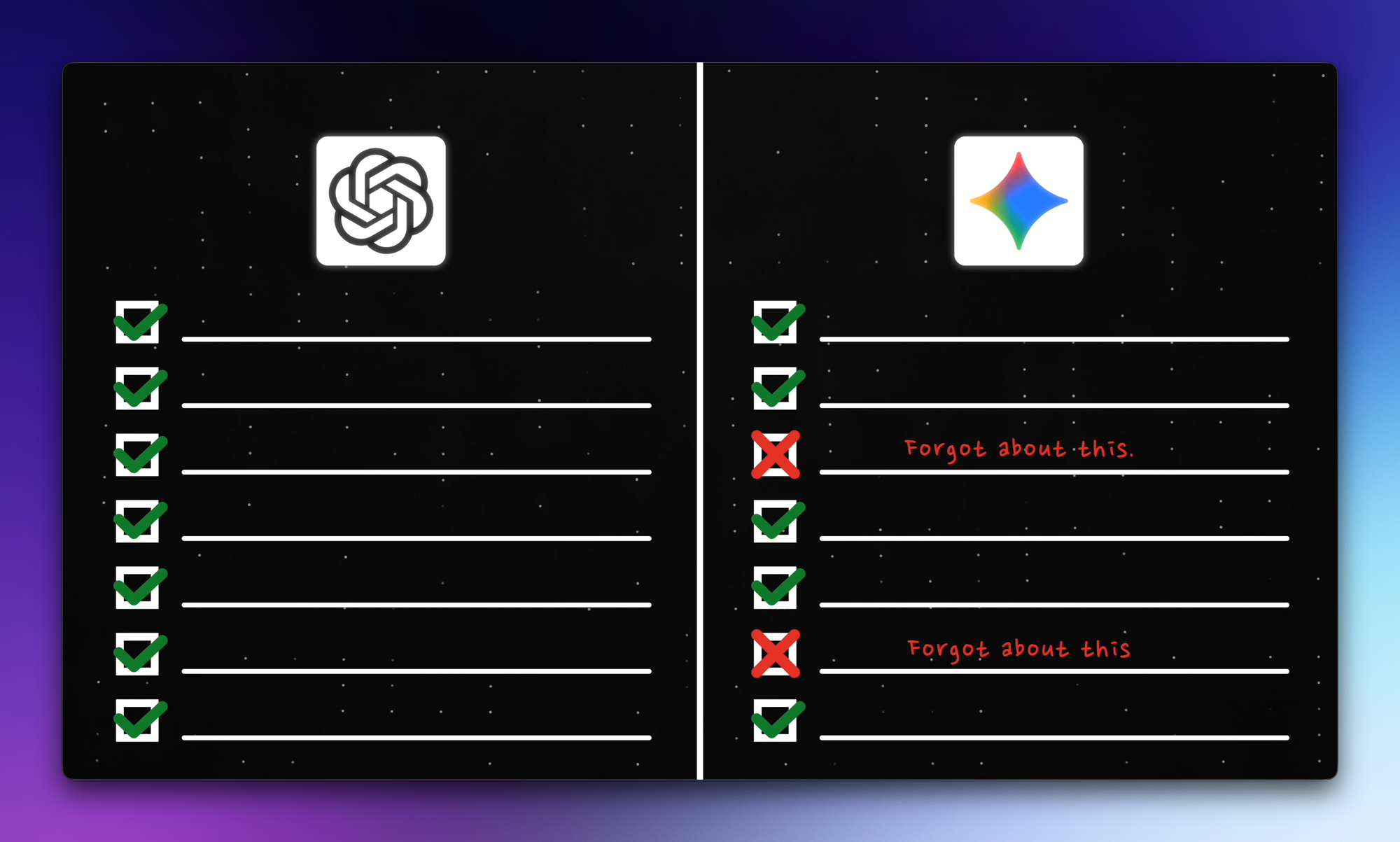

Long story short, ChatGPT drops fewer balls when you hand it a complex checklist. Other models might match its raw intelligence, but give them a lengthy set of instructions and they will sometimes skip a step or decide they know better.

You can test this yourself by asking each model to optimize a rough prompt for itself.

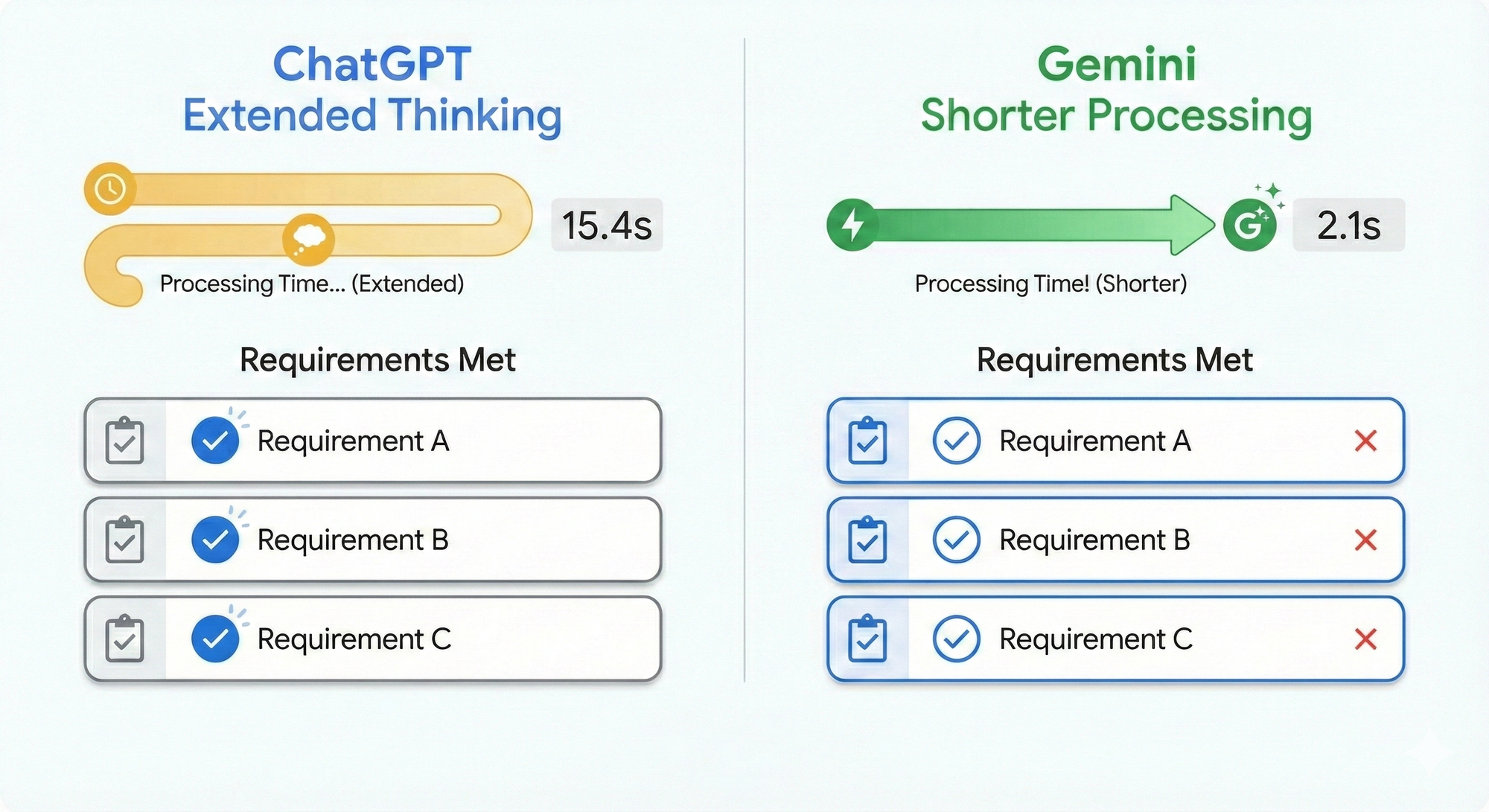

ChatGPT will generate a noticeably longer and more detailed prompt because it knows it can handle the complexity. Run that optimized prompt through both ChatGPT and Gemini, and you will notice ChatGPT "thinks" longer because it actually checks every requirement. Gemini often takes shortcuts.

Here's a real-world example:

- I gave both ChatGPT and Gemini the same complex prompt for a hiring rubric with a dozen requirements. ChatGPT delivered every single one. Gemini's output looked right at first glance, but when I checked it against my original list, it had quietly dropped a few rules.

Another example:

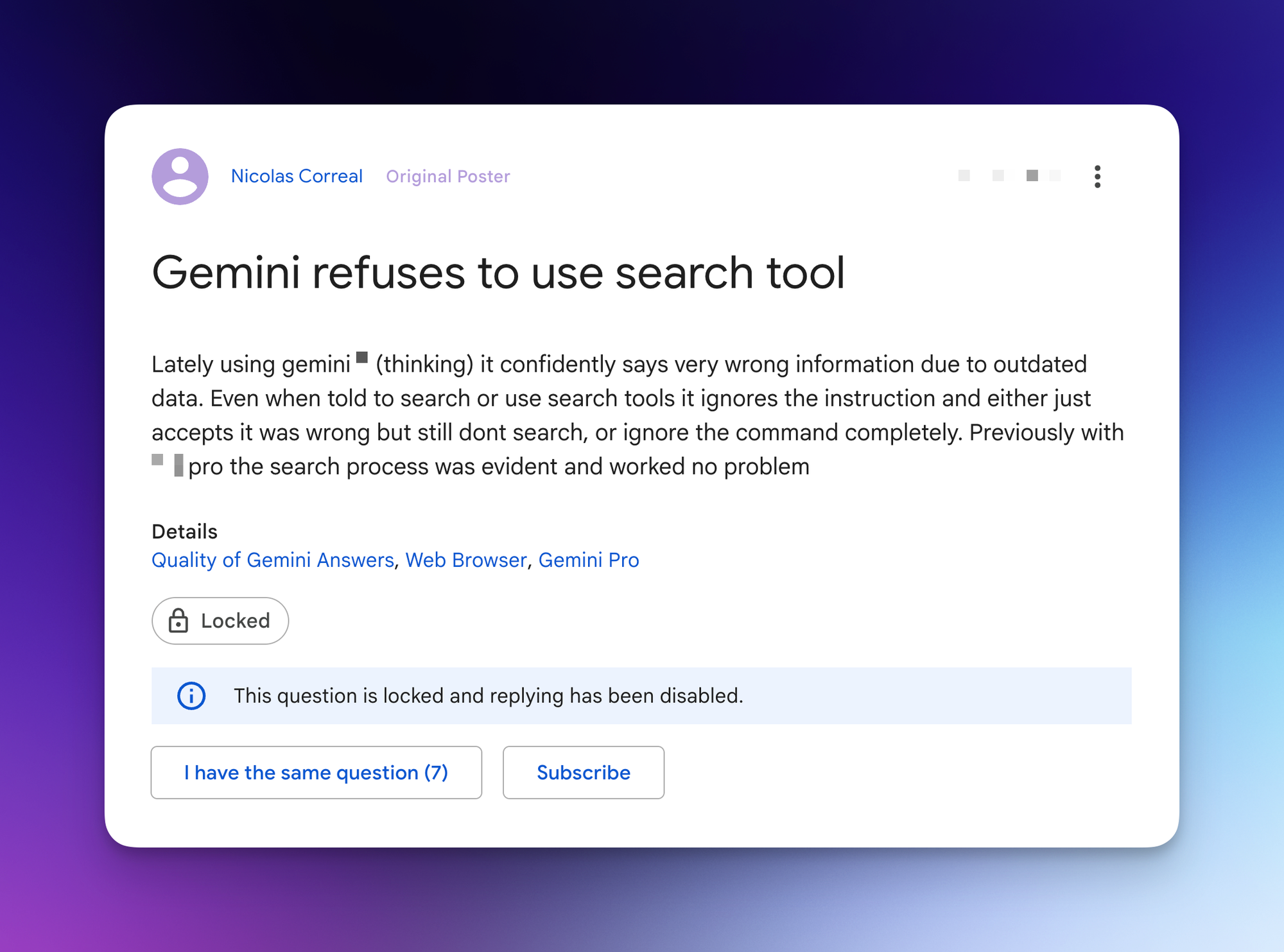

- Sometimes when you explicitly tell Gemini to search the web, it simply does not. This is surprising given that Gemini and Google Search are both Google products. With ChatGPT, when you enable web search, it performs the search every single time.

Gemini: The Multimodal Powerhouse

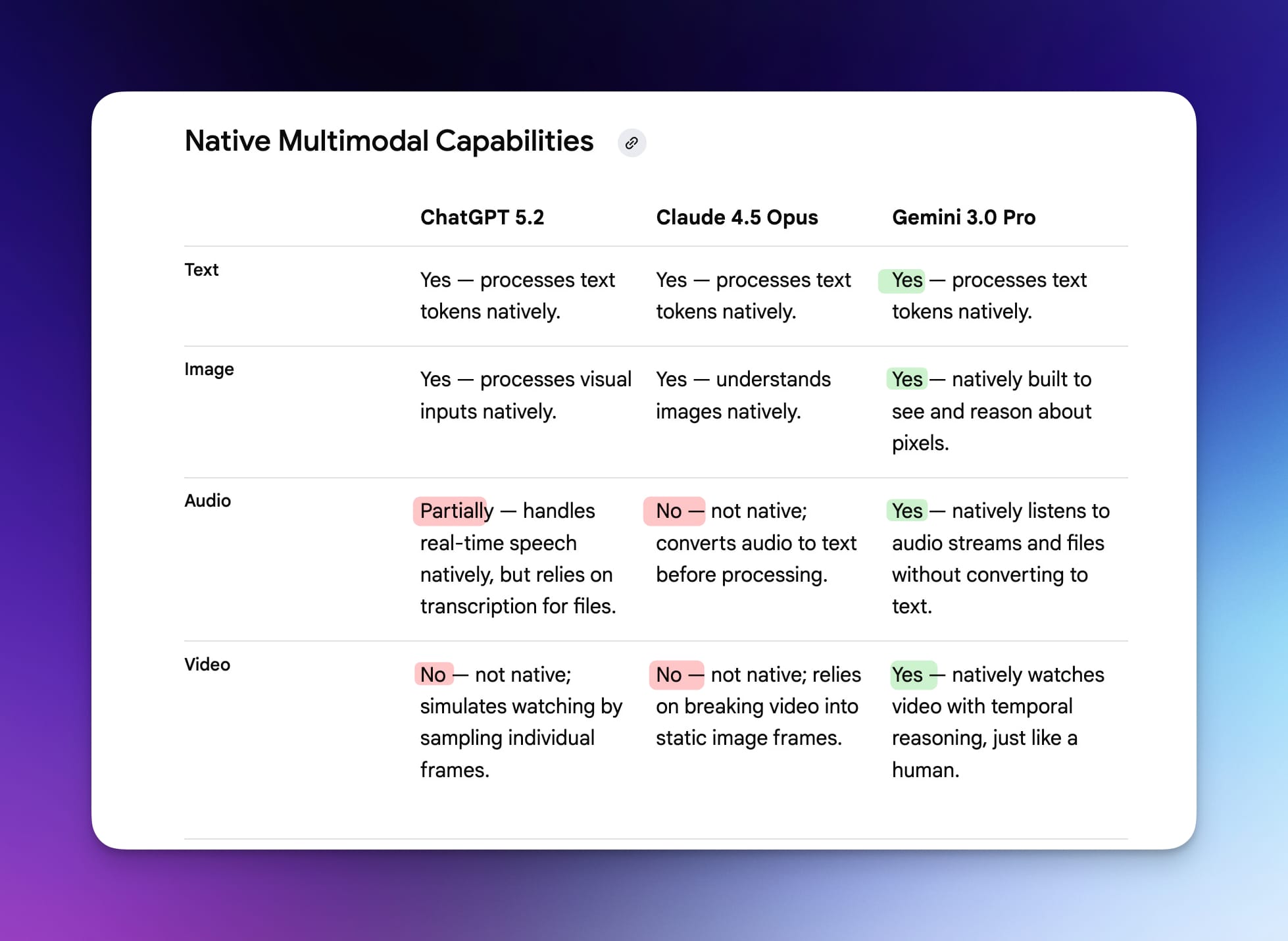

Where ChatGPT wins on obedience, Gemini wins on multimodality. Gemini processes video, audio, images, and text natively. It can "listen" to audio and "watch" videos while ChatGPT and Claude use roundabout methods to access that information.

Gemini's massive 1-million-token context window means it can handle long video recordings, hour-long audio files, and full slide decks all at once. Other models would choke on that volume.

Consider this scenario:

- You just finished a weekly meeting. You have a video recording of the call, a 20-slide deck, and a photo of a messy whiteboard session. You can upload all three to Gemini and ask it to summarize the discussion, pull out key decisions, and draft the follow-up email. Gemini is the only tool that can synthesize all three in one go.

The tradeoff: Gemini's raw reasoning capabilities sometimes feel slightly behind ChatGPT. But when the task involves video, audio, or massive files, the tradeoff is worth it.

Claude: The First-Draft Champion

Claude's superpower is producing higher-quality first drafts than the other models: its first attempt is usually closer to "done."

This shows up in two areas:

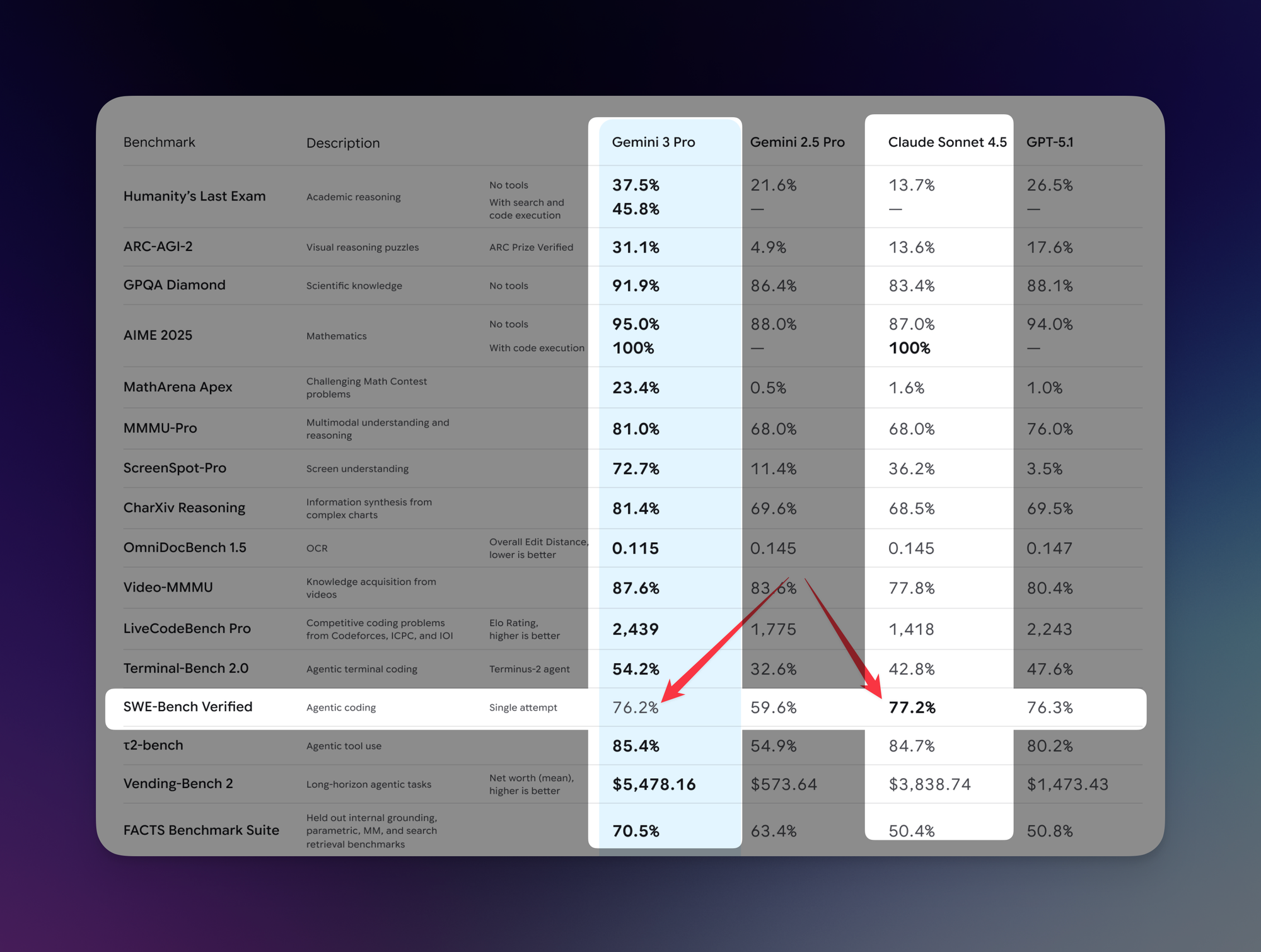

- Coding: The latest version of Gemini beat an older version of Claude on every single benchmark except coding. Anthropic has figured out something about code generation that the others have not. Developers consistently report that Claude writes functional code on the first try more reliably than alternatives.

- Here is a real example: I needed to bulk export conversations from a customer service platform, but their support team said only developers could do it. I described the problem to Claude, and it gave me step-by-step instructions plus a script in Go that worked on the first try. I do not know Go, and I cannot write code.

- Polishing copy: Beyond code, Claude produces written drafts that sound human and need fewer revisions. Specifically, it excels at style matching. Share examples of your existing work, and it replicates your tone almost perfectly.

- When I was in corporate, I shared previous documents so Claude could replicate that voice across presentations and performance reviews. Now as a creator, I feed it my existing YouTube scripts to help refine new drafts.

How These Three Tools Work Together

In practice, ChatGPT or Gemini usually handles the beginning of work: ideation, research, drafting the outline. Claude then handles the last mile, turning rough output into something ready to present or publish.

A note on Grok: People ask why I don't use Grok. 😅

Grok's superpower is direct access to the Twitter/X firehose, making it the best option for analyzing breaking news in real time. If you don't need that, then you don't need Grok! Never use tools just for the sake of using tools. Add them to your toolkit only when they solve an actual problem you have.

Do you need all three? No.

Most people should stick with the paid version of ChatGPT and get really good at it. But if you can afford multiple subscriptions and your workflow can take advantage of their individual superpowers, mix and match as needed.

Specialist AI: Purpose-Built Tools

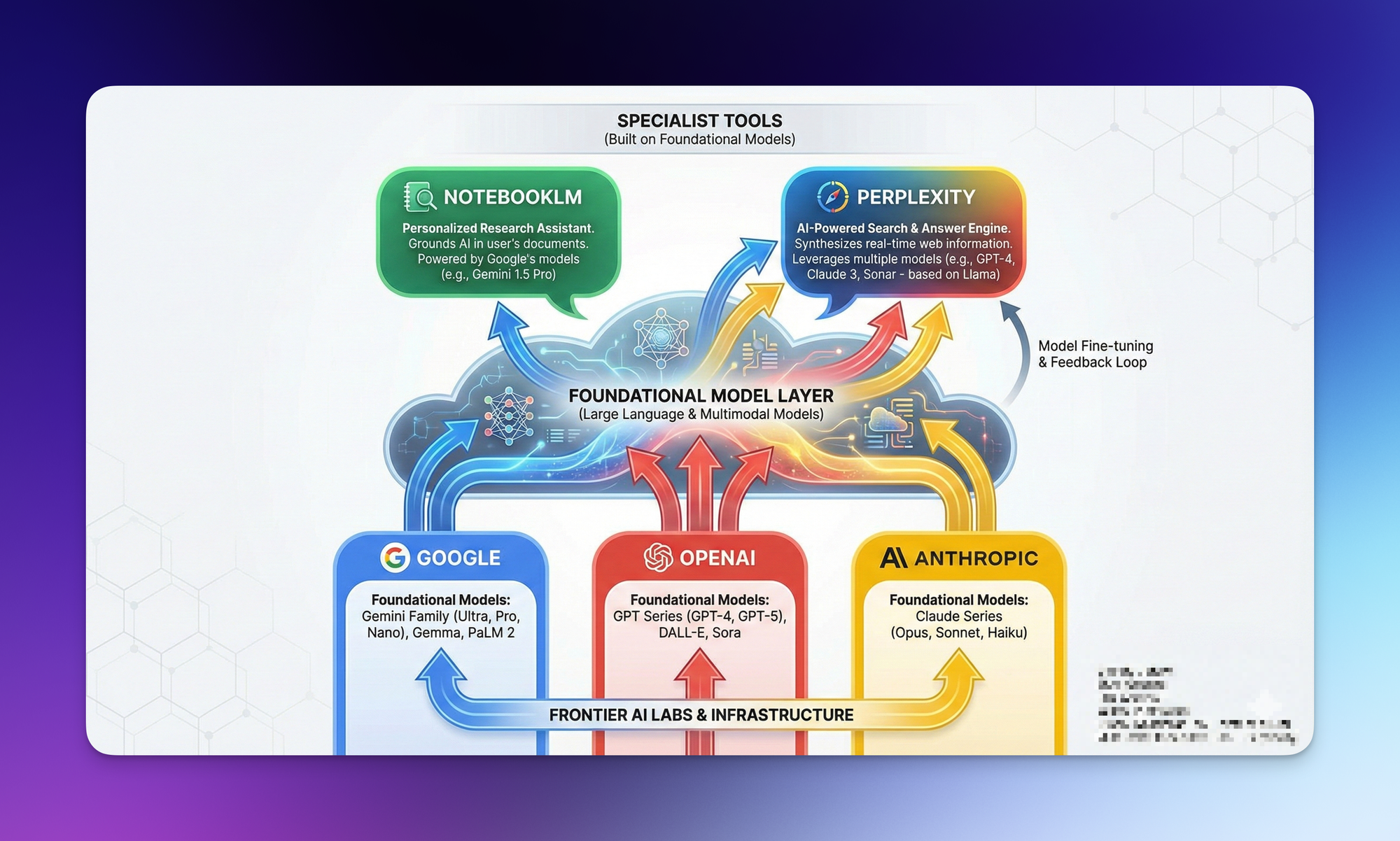

Before we continue, let me clear up a common misconception: Tools like Perplexity are not foundational models.

OpenAI develops the GPT family of models and created ChatGPT as the user-friendly app layer.

Perplexity is different. It fine-tunes existing foundational models for speed and accuracy, optimizing them specifically for search. Its "Sonar" model, for example, is a fine-tuned version of Meta's open-weight Llama model.

Perplexity: Fast, Accurate Information Retrieval

Perplexity's superpower is finding accurate information fast.

General-purpose chatbots are built for reasoning. You use them to help you think, brainstorm, or write a draft. Perplexity is built for fetching. You need a specific fact, and you need it now.

Here's a simple example:

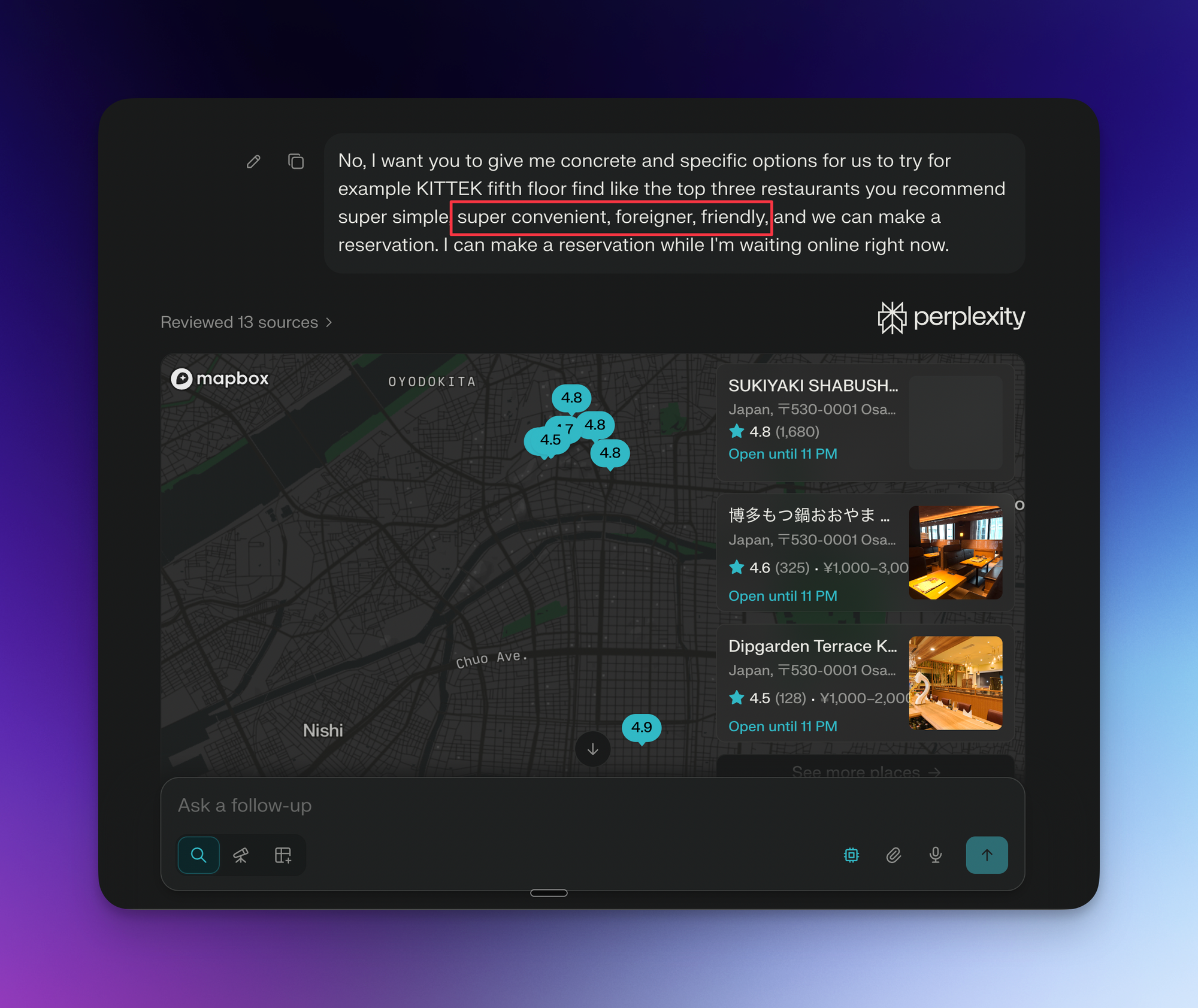

- I used ChatGPT to plan a trip to Japan with my brother because that is a creative task. It requires weighing tradeoffs and building a narrative, and for that kind of task, I am happy to wait while the model thinks.

But when I need "grab and go" information, like whether a specific restaurant is foreigner-friendly because we do not speak Japanese, I want Perplexity to give me accurate information within seconds.

The way to think of Perplexity is as a replacement for Google AI Mode. Both fetch information. Neither replaces general-purpose chatbots for reasoning tasks.

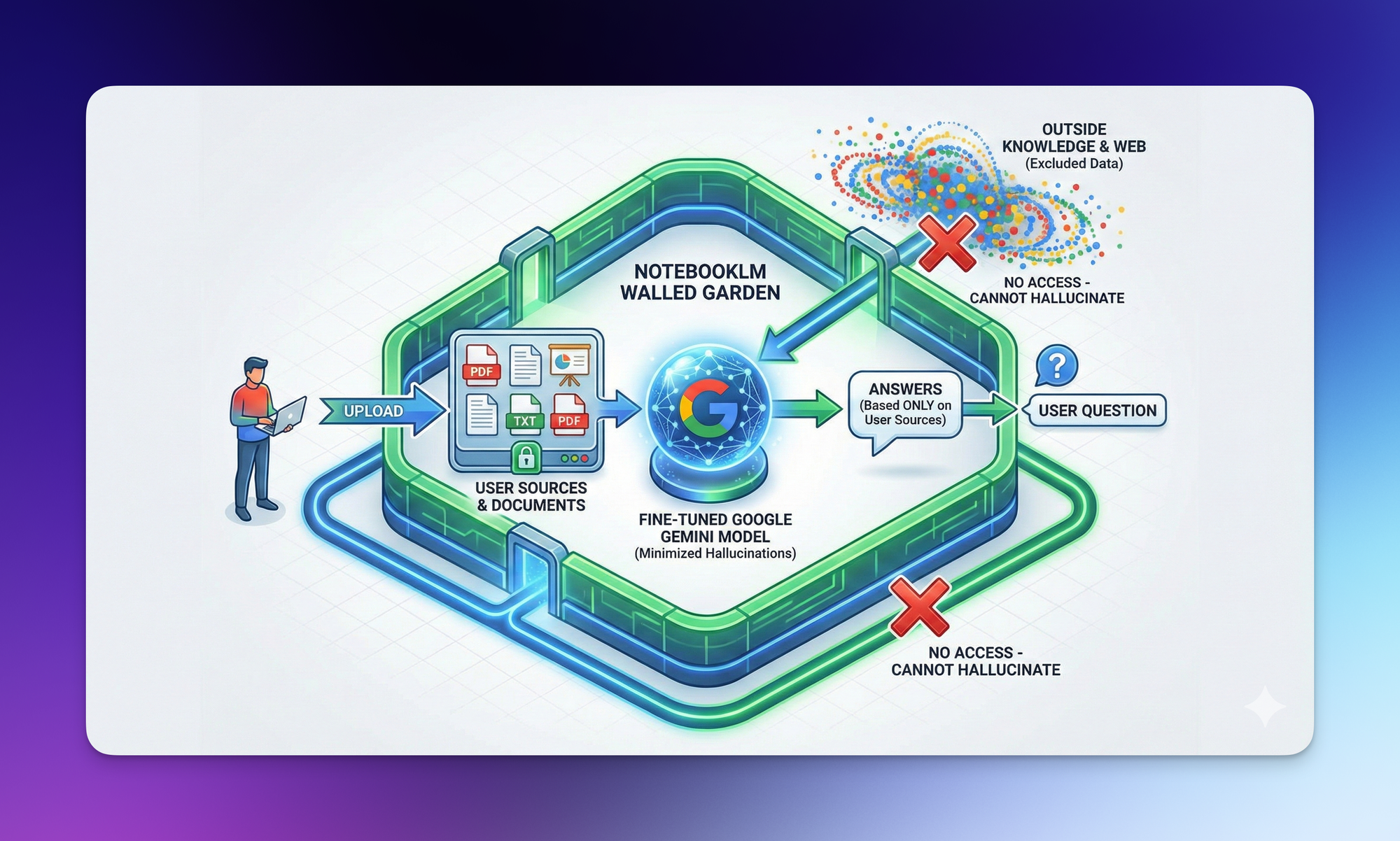

NotebookLM: The Anti-Hallucination Tool

NotebookLM's superpower is that it only answers from the sources you give it, which makes it far less likely to make things up.

Think of it as a walled garden. You upload your sources, and NotebookLM answers questions using only those documents. Under the hood, it runs on a Google Gemini model fine-tuned specifically to minimize hallucinations, and because every answer is grounded in your documents rather than the model's outside knowledge, it rarely invents facts.

When I was at Google, before publishing marketing materials, I would upload the final draft alongside the source documents and ask NotebookLM whether the draft made any claims that contradicted the sources. It would catch tiny discrepancies that other AI tools might have missed.

I use a similar workflow today for my videos. Before I start filming, I upload my script and all my research into NotebookLM and ask it to flag anything not directly supported by the source material.

The obvious caveat: The output is only as good as the sources you upload. If the sources are incorrect, the output will be confidently incorrect.

Specialist AI: Lightning Round

A few more specialist AI tools deserve mention, even though they do not get daily use:

- Gamma for presentations

- ElevenLabs for voice cloning

- Zapier and n8n for automation

- Excalidraw and Napkin AI for quick visuals

If you enjoyed this...

You might also like my latest tutorial on Gemini!